Continual Learning in NLP: Tackling the Challenge of Catastrophic Forgetting

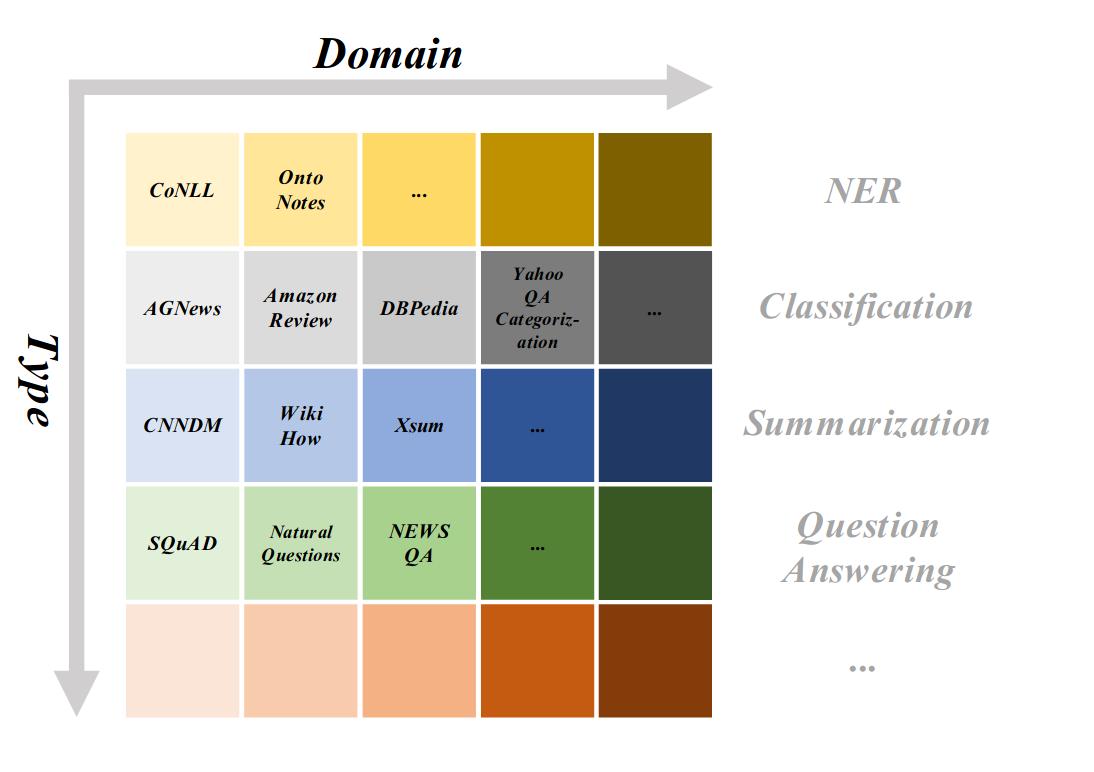

Introduction

Machine learning models, particularly in Natural Language Processing (NLP), are becoming increasingly powerful. Yet they suffer from a critical limitation: they forget. When trained on new tasks or domains, models often lose the ability to perform tasks they learned earlier, a phenomenon known as catastrophic forgetting. The problem grows more pressing as NLP systems are expected to evolve alongside ever-changing human language. In my recent bachelor’s thesis, I explored how to mitigate catastrophic forgetting in NLP through continual learning....