Introduction

Machine learning models, particularly in Natural Language Processing (NLP), are becoming increasingly powerful. Yet, they suffer from a critical limitation: they forget. When trained on new tasks or domains, models often lose their ability to perform previously learned tasks—a phenomenon known as catastrophic forgetting. This problem becomes more pressing as NLP systems are expected to evolve alongside the ever-changing nature of human language.

In my recent bachelor’s thesis, I explored how to mitigate catastrophic forgetting in NLP through continual learning. The goal? To enable lifelong learning models that can adapt to new information while retaining past knowledge. This post summarizes the key insights and contributions of my research, which formed the basis of my bachelor thesis.

The Challenge of Continual Learning

Traditional NLP models follow a pretrain-then-finetune paradigm:

- Pretraining: The model learns general language patterns from a massive corpus.

- Finetuning: The model is adapted to a specific task (e.g., sentiment analysis or summarization).

Once fine-tuned, the model is frozen—it can no longer update its knowledge without being retrained on the full dataset. This is inefficient and costly, especially for large-scale transformer models.

Continual Learning (CL) offers an alternative. Instead of retraining from scratch, CL enables models to learn sequentially while retaining past knowledge. However, achieving this requires overcoming two major challenges:

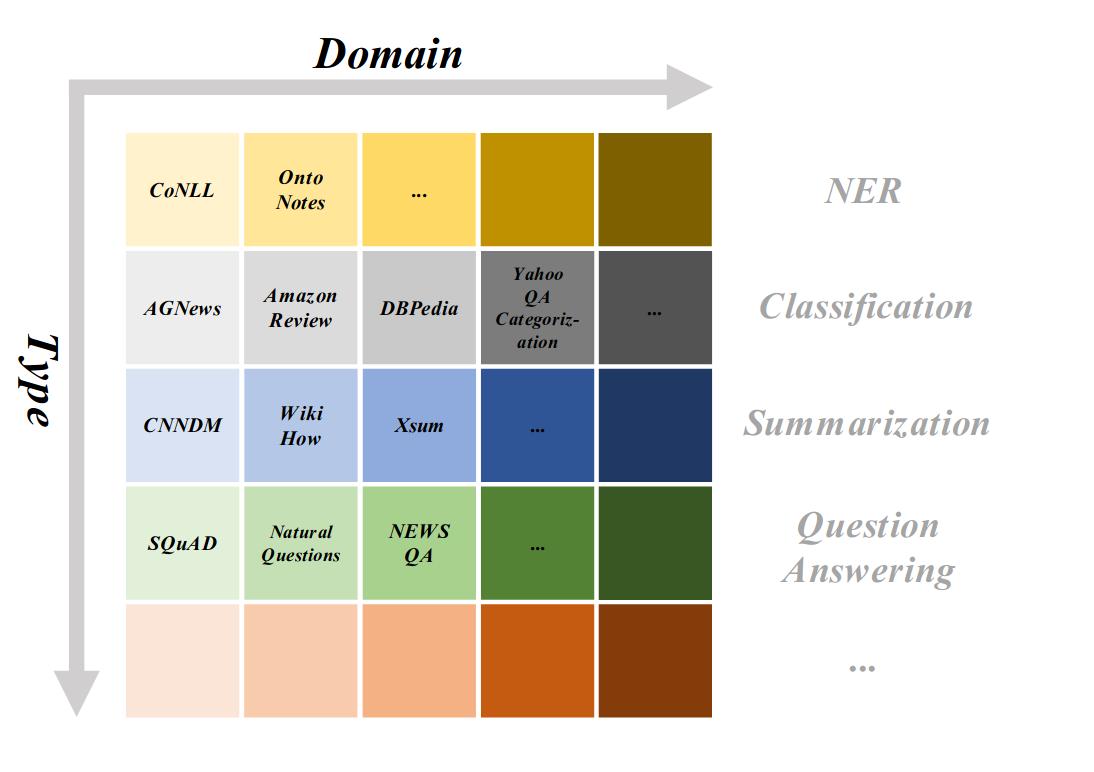

- Task-Incremental Learning (TIL): How can a model learn new NLP tasks without overwriting previous ones?

- Domain-Incremental Learning (DIL): How can a model generalize across different domains (e.g., social media vs. news articles) without task labels?

A New Framework for Continual Learning in NLP

To address these challenges, I developed a continual learning framework based on the T5 model (Text-to-Text Transfer Transformer). This framework integrates:

1. Adapter-Based Learning for Task-Incremental Learning

Instead of updating the entire model, I employed lightweight adapter modules—small task-specific components that can be swapped in and out for different tasks. The adapters evaluated include:

- Bottleneck Adapters (best-performing method)

- Low-Rank Adaptations (LoRA)

- Prefix-Tuning

By isolating task-specific learning to adapters, the core model remains stable while efficiently learning multiple tasks without forgetting.

2. Replay-Based Strategies for Domain-Incremental Learning

For domain adaptation, the framework implements replay-based continual learning, where past training data is revisited to reinforce memory. Two strategies were tested:

- Real Sampling: Storing and replaying actual past training samples.

- Pseudo Sampling: Using the model itself to generate synthetic samples from past domains.

Pseudo-rehearsal allows models to retain knowledge without explicitly storing past data, which is crucial for privacy and scalability.

Key Findings

The results of my bachelor thesis demonstrate:

- Bottleneck adapters achieved the best tradeoff between efficiency and performance.

- Generative replay (pseudo-rehearsal) proved effective in mitigating forgetting, though sample quality remains a challenge.

- The combination of adapter-based TIL and replay-based DIL provides a strong foundation for lifelong NLP models.

The Future of Continual NLP

As large language models (LLMs) continue to evolve, continual learning will become essential to keep them relevant without excessive retraining costs. The research in this field is still in its early stages, but the potential impact is enormous.

Imagine an NLP system that continuously improves over time, adapting to new dialects, emerging slang, and domain-specific knowledge—all while retaining everything it has learned before.

That is the future of AI.

Final Thoughts

If you’re interested in continual learning, you can check out the code for my framework here:

I’d love to hear your thoughts! Have you encountered catastrophic forgetting in your NLP projects? Let’s discuss in the comments or reach out on LinkedIn.

Thanks for reading! If you found this post insightful, consider sharing it with others in the AI community. 🚀